Portal maintenance status: (September 2019)

|

The Computer Programming Portal

Computer programming or coding is the composition of sequences of instructions, called programs, that computers can follow to perform tasks. It involves designing and implementing algorithms, step-by-step specifications of procedures, by writing code in one or more programming languages. Programmers typically use high-level programming languages that are more easily intelligible to humans than machine code, which is directly executed by the central processing unit. Proficient programming usually requires expertise in several different subjects, including knowledge of the application domain, details of programming languages and generic code libraries, specialized algorithms, and formal logic.

Auxiliary tasks accompanying and related to programming include analyzing requirements, testing, debugging (investigating and fixing problems), implementation of build systems, and management of derived artifacts, such as programs' machine code. While these are sometimes considered programming, often the term software development is used for this larger overall process – with the terms programming, implementation, and coding reserved for the writing and editing of code per se. Sometimes software development is known as software engineering, especially when it employs formal methods or follows an engineering design process. (Full article...)

Selected articles - load new batch

-

Image 1In C++ computer programming, allocators are a component of the C++ Standard Library. The standard library provides several data structures, such as list and set, commonly referred to as containers. A common trait among these containers is their ability to change size during the execution of the program. To achieve this, some form of dynamic memory allocation is usually required. Allocators handle all the requests for allocation and deallocation of memory for a given container. The C++ Standard Library provides general-purpose allocators that are used by default; however, custom allocators may also be supplied by the programmer.

Allocators were invented by Alexander Stepanov as part of the Standard Template Library (STL). They were originally intended as a means to make the library more flexible and independent of the underlying memory model, allowing programmers to utilize custom pointer and reference types with the library. However, in the process of adopting STL into the C++ standard, the C++ standardization committee realized that a complete abstraction of the memory model would incur unacceptable performance penalties. To remedy this, the requirements of allocators were made more restrictive. As a result, the level of customization provided by allocators is more limited than was originally envisioned by Stepanov.

Nevertheless, there are many scenarios where customized allocators are desirable. Some of the most common reasons for writing custom allocators include improving performance of allocations by using memory pools, and encapsulating access to different types of memory, like shared memory or garbage-collected memory. In particular, programs with many frequent allocations of small amounts of memory may benefit greatly from specialized allocators, both in terms of running time and memory footprint. (Full article...) -

Image 2

In computing, assembly language (alternatively assembler language or symbolic machine code), often referred to simply as assembly and commonly abbreviated as ASM or asm, is any low-level programming language with a very strong correspondence between the instructions in the language and the architecture's machine code instructions. Assembly language usually has one statement per machine code instruction (1:1), but constants, comments, assembler directives, symbolic labels of, e.g., memory locations, registers, and macros are generally also supported.

The first assembly code in which a language is used to represent machine code instructions is found in Kathleen and Andrew Donald Booth's 1947 work, Coding for A.R.C.. Assembly code is converted into executable machine code by a utility program referred to as an assembler. The term "assembler" is generally attributed to Wilkes, Wheeler and Gill in their 1951 book The Preparation of Programs for an Electronic Digital Computer, who, however, used the term to mean "a program that assembles another program consisting of several sections into a single program". The conversion process is referred to as assembly, as in assembling the source code. The computational step when an assembler is processing a program is called assembly time.

Because assembly depends on the machine code instructions, each assembly language is specific to a particular computer architecture such as x86 or ARM. (Full article...) -

Image 3

R is a programming language for statistical computing and data visualization. It has been widely adopted in the fields of data mining, bioinformatics, data analysis, and data science.

The core R language is extended by a large number of software packages, which contain reusable code, documentation, and sample data. Some of the most popular R packages are in the tidyverse collection, which enhances functionality for visualizing, transforming, and modelling data, as well as improves the ease of programming (according to the authors and users).

R is free and open-source software distributed under the GNU General Public License. The language is implemented primarily in C, Fortran, and R itself. Precompiled executables are available for the major operating systems (including Linux, MacOS, and Microsoft Windows). (Full article...) -

Image 4Java is a high-level, general-purpose, memory-safe, object-oriented programming language. It is intended to let programmers write once, run anywhere (WORA), meaning that compiled Java code can run on all platforms that support Java without the need to recompile. Java applications are typically compiled to bytecode that can run on any Java virtual machine (JVM) regardless of the underlying computer architecture. The syntax of Java is similar to C and C++, but has fewer low-level facilities than either of them. The Java runtime provides dynamic capabilities (such as reflection and runtime code modification) that are typically not available in traditional compiled languages.

Java gained popularity shortly after its release, and has been a popular programming language since then. Java was the third most popular programming language in 2022[update] according to GitHub. Although still widely popular, there has been a gradual decline in use of Java in recent years with other languages using JVM gaining popularity.

Java was designed by James Gosling at Sun Microsystems. It was released in May 1995 as a core component of Sun's Java platform. The original and reference implementation Java compilers, virtual machines, and class libraries were released by Sun under proprietary licenses. As of May 2007, in compliance with the specifications of the Java Community Process, Sun had relicensed most of its Java technologies under the GPL-2.0-only license. Oracle, which bought Sun in 2010, offers its own HotSpot Java Virtual Machine. However, the official reference implementation is the OpenJDK JVM, which is open-source software used by most developers and is the default JVM for almost all Linux distributions. (Full article...) -

Image 5

tcsh and sh shell windows on a Mac OS X Leopard desktop

A "Unix shell" is a shell that provides a command-line user interface for a Unix-like operating system. A Unix shell provides a command language that can be used either interactively or for writing a shell script. A user typically works within a Unix shell via a terminal emulator; however, direct access via serial hardware connections or a Secure Shell are common for server systems. Although use of a Unix shell is popular with some users, others prefer to use a graphical shell in a windowing system, such as those provided in desktop Linux distributions or macOS, instead of a command-line interface (CLI).

A user may have access to multiple Unix shells with one configured to run by default when the user logs in interactively. The default selection is typically stored in a user's profile; for example, in the local passwd file or in a distributed configuration system such as NIS or LDAP. A user may use other shells nested inside the default shell.

A Unix shell may provide many features including: variable definition and substitution, command substitution, filename wildcarding, stream piping, control flow structures (condition-testing and iteration), working directory context, and here document. (Full article...) -

Image 6

Stephen Gary Wozniak (/ˈwɒzniæk/; born August 11, 1950), also known by his nickname Woz, is an American technology entrepreneur, electrical engineer, computer programmer, and inventor. In 1976, he co-founded Apple Computer with his early business partner Steve Jobs. Through his work at Apple in the 1970s and 1980s, he is widely recognized as one of the most prominent pioneers of the personal computer revolution.

In 1975, Wozniak started developing the Apple I into the computer that launched Apple when he and Jobs first began marketing it the following year. He was the primary designer of the Apple II, introduced in 1977, known as one of the first highly successful mass-produced microcomputers, while Jobs oversaw the development of its foam-molded plastic case and early Apple employee Rod Holt developed its switching power supply.

With human–computer interface expert Jef Raskin, Wozniak had a major influence over the initial development of the original Macintosh concepts from 1979 to 1981, when Jobs took over the project following Wozniak's brief departure from the company due to a traumatic airplane accident. After permanently leaving Apple in 1985, Wozniak founded CL 9 and created the first programmable universal remote, released in 1987. He then pursued several other business and philanthropic ventures throughout his career, focusing largely on technology in K–12 schools, which involved a 1990 initiative to place computers in schools in the former Soviet Union. (Full article...) -

Image 7In computing, a compiler is software that translates computer code written in one programming language (the source language) into another language (the target language). The name "compiler" is primarily used for programs that translate source code from a high-level programming language to a low-level programming language (e.g. assembly language, object code, or machine code) to create an executable program.

There are many different types of compilers which produce output in different useful forms. A cross-compiler produces code for a different CPU or operating system than the one on which the cross-compiler itself runs. A bootstrap compiler is often a temporary compiler, used for compiling a more permanent or better optimized compiler for a language.

Related software include decompilers, programs that translate from low-level languages to higher level ones; programs that translate between high-level languages, usually called source-to-source compilers or transpilers; language rewriters, usually programs that translate the form of expressions without a change of language; and compiler-compilers, compilers that produce compilers (or parts of them), often in a generic and reusable way so as to be able to produce many differing compilers. (Full article...) -

Image 8

PDP-11 CPU board

Computer hardware includes the physical parts of a computer, such as the central processing unit (CPU), random-access memory (RAM), motherboard, computer data storage, graphics card, sound card, and computer case. It includes external devices such as a monitor, mouse, keyboard, and speakers.

By contrast, software is a set of written instructions that can be stored and run by hardware. Hardware derived its name from the fact it is hard or rigid with respect to changes, whereas software is soft because it is easy to change.

Hardware is typically directed by the software to execute any command or instruction. A combination of hardware and software forms a usable computing system, although other systems exist with only hardware. (Full article...) -

Image 9Computer programming or coding is the composition of sequences of instructions, called programs, that computers can follow to perform tasks. It involves designing and implementing algorithms, step-by-step specifications of procedures, by writing code in one or more programming languages. Programmers typically use high-level programming languages that are more easily intelligible to humans than machine code, which is directly executed by the central processing unit. Proficient programming usually requires expertise in several different subjects, including knowledge of the application domain, details of programming languages and generic code libraries, specialized algorithms, and formal logic.

Auxiliary tasks accompanying and related to programming include analyzing requirements, testing, debugging (investigating and fixing problems), implementation of build systems, and management of derived artifacts, such as programs' machine code. While these are sometimes considered programming, often the term software development is used for this larger overall process – with the terms programming, implementation, and coding reserved for the writing and editing of code per se. Sometimes software development is known as software engineering, especially when it employs formal methods or follows an engineering design process. (Full article...) -

Image 10

W3sDesign Interpreter Design Pattern UML

In computing, an interpreter is software that executes source code without first compiling it to machine code. An interpreted runtime environment differs from one that processes CPU-native executable code which requires translating source code before executing it. An interpreter may translate the source code to an intermediate format, such as bytecode. A hybrid environment may translate the bytecode to machine code via just-in-time compilation, as in the case of .NET and Java, instead of interpreting the bytecode directly.

Before the widespread adoption of interpreters, the execution of computer programs often relied on compilers, which translate and compile source code into machine code. Early runtime environments for Lisp and BASIC could parse source code directly. Thereafter, runtime environments were developed for languages (such as Perl, Raku, Python, MATLAB, and Ruby), which translated source code into an intermediate format before executing to enhance runtime performance.

Code that runs in an interpreter can be run on any platform that has a compatible interpreter. The same code can be distributed to any such platform, instead of an executable having to be built for each platform. Although each programming language is usually associated with a particular runtime environment, a language can be used in different environments. Interpreters have been constructed for languages traditionally associated with compilation, such as ALGOL, Fortran, COBOL, C and C++. (Full article...) -

Image 11

Ruby is a general-purpose programming language. It was designed with an emphasis on programming productivity and simplicity. In Ruby, everything is an object, including primitive data types. It was developed in the mid-1990s by Yukihiro "Matz" Matsumoto in Japan.

Ruby is interpreted, high-level, and dynamically typed; its interpreter uses garbage collection and just-in-time compilation. It supports multiple programming paradigms, including procedural, object-oriented, and functional programming. According to the creator, Ruby was influenced by Perl, Smalltalk, Eiffel, Ada, BASIC, and Lisp. (Full article...) -

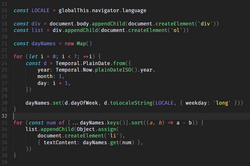

Image 12

JavaScript (JS) is a programming language and core technology of the Web, alongside HTML and CSS. It was created by Brendan Eich in 1995. As of 2025, the overwhelming majority of websites (98.9%) uses JavaScript on the client side for webpage behavior.

Web browsers have a dedicated JavaScript engine that executes the client code. These engines are also utilized in some servers and a variety of apps. The most popular runtime system for non-browser usage is Node.js.

JavaScript is a high-level, often just-in-time–compiled language that conforms to the ECMAScript standard. It has dynamic typing, prototype-based object-orientation, and first-class functions. It is multi-paradigm, supporting event-driven, functional, and imperative programming styles. It has application programming interfaces (APIs) for working with text, dates, regular expressions, standard data structures, and the Document Object Model (DOM). (Full article...) -

Image 13

Yoshua Bengio OC OQ FRS FRSC (born March 5, 1964) is a Canadian computer scientist, and a pioneer of artificial neural networks and deep learning. He is a professor at the Université de Montréal and co-president and scientific director of the nonprofit LawZero. He founded Mila, the Quebec Artificial Intelligence (AI) Institute, and was its scientific director until 2025.

Bengio received the 2018 ACM A.M. Turing Award, often referred to as the "Nobel Prize of Computing", together with Geoffrey Hinton and Yann LeCun, for their foundational work on deep learning. Bengio, Hinton, and LeCun are sometimes referred to as the "Godfathers of AI". Bengio is the most-cited computer scientist globally (by both total citations and by h-index), and the most-cited living scientist across all fields (by total citations). In November 2025, Bengio became the first AI researcher with more than a million Google Scholar citations. In 2024, TIME Magazine included Bengio in its yearly list of the world's 100 most influential people. (Full article...) -

Image 14

Swift is a high-level general-purpose, multi-paradigm, compiled programming language created by Chris Lattner in 2010 for Apple Inc. and maintained by the open-source community. Swift compiles to machine code and uses an LLVM-based compiler. Swift was first released in June 2014 and the Swift toolchain has shipped in Xcode since Xcode version 6, released in September 2014.

Apple intended Swift to support many core concepts associated with Objective-C, notably dynamic dispatch, widespread late binding, extensible programming, and similar features, but in a "safer" way, making it easier to catch software bugs; Swift has features addressing some common programming errors like null pointer dereferencing and provides syntactic sugar to help avoid the pyramid of doom. Swift supports the concept of protocol extensibility, an extensibility system that can be applied to types, structs and classes, which Apple promotes as a real change in programming paradigms they term "protocol-oriented programming" (similar to traits and type classes).

Swift was introduced at Apple's 2014 Worldwide Developers Conference (WWDC). It underwent an upgrade to version 1.2 during 2014 and a major upgrade to Swift 2 at WWDC 2015. It was initially a proprietary language, but version 2.2 was made open-source software under the Apache License 2.0 on December 3, 2015, for Apple's platforms and Linux. (Full article...) -

Image 15Information privacy is the relationship between the collection and dissemination of data, technology, the public expectation of privacy, contextual information norms, and the legal and political issues surrounding them. It is also known as data privacy or data protection. (Full article...)

Selected images

-

Image 1Ada Lovelace was an English mathematician and writer, chiefly known for her work on Charles Babbage's proposed mechanical general-purpose computer, the Analytical Engine. She was the first to recognize that the machine had applications beyond pure calculation, and to have published the first algorithm intended to be carried out by such a machine. As a result, she is often regarded as the first computer programmer.

-

Image 3Stephen Wolfram is a British-American computer scientist, physicist, and businessman. He is known for his work in computer science, mathematics, and in theoretical physics.

-

Image 4Partial map of the Internet based on the January 15, 2005 data found on opte.org. Each line is drawn between two nodes, representing two IP addresses. The length of the lines are indicative of the delay between those two nodes. This graph represents less than 30% of the Class C networks reachable by the data collection program in early 2005.

-

Image 5This image (when viewed in full size, 1000 pixels wide) contains 1 million pixels, each of a different color.

-

Image 6An IBM Port-A-Punch punched card

-

Image 7Margaret Hamilton standing next to the navigation software that she and her MIT team produced for the Apollo Project.

-

Image 8A view of the GNU nano Text editor version 6.0

-

Image 10Deep Blue was a chess-playing expert system run on a unique purpose-built IBM supercomputer. It was the first computer to win a game, and the first to win a match, against a reigning world champion under regular time controls. Photo taken at the Computer History Museum.

-

Image 11A lone house. An image made using Blender 3D.

-

Image 13Partial view of the Mandelbrot set. Step 1 of a zoom sequence: Gap between the "head" and the "body" also called the "seahorse valley".

-

Image 14Grace Hopper at the UNIVAC keyboard, c. 1960. Grace Brewster Murray: American mathematician and rear admiral in the U.S. Navy who was a pioneer in developing computer technology, helping to devise UNIVAC I. the first commercial electronic computer, and naval applications for COBOL (common-business-oriented language).

-

Image 16Output from a (linearised) shallow water equation model of water in a bathtub. The water experiences 5 splashes which generate surface gravity waves that propagate away from the splash locations and reflect off of the bathtub walls.

-

Image 17A head crash on a modern hard disk drive

-

Image 18GNOME Shell, GNOME Clocks, Evince, gThumb and GNOME Files at version 3.30, in a dark theme

Did you know? - load more entries

- ... that it took a particle accelerator and machine-learning algorithms to extract the charred text of PHerc. Paris. 4 without unrolling it?

- ... that a "hacker" with blog posts written by ChatGPT was at the center of an online scavenger hunt promoting Avenged Sevenfold's album Life Is but a Dream...?

- ... that Phil Fletcher as Hacker T. Dog caused Lauren Layfield to make the "most famous snort" in the United Kingdom in 2016?

- ... that both Thackeray and Longfellow bought paintings by Fanny Steers?

- ... that NATO was once targeted by a group of "gay furry hackers"?

- ... that the 2024 psychological horror game Mouthwashing utilises non-diegetic scene transitions that mimic glitches and crashes?

Subcategories

WikiProjects

- There are many users interested in computer programming, join them.

Computer programming news

- 28 October 2025 – AI boom, Workplace impact of artificial intelligence

- Multinational technology and e-commerce company Amazon announces it will lay off 14,000 corporate positions as it invests more in building AI and cloud computing infrastructure. (DW) (CNBC)

Topics

Related portals

Associated Wikimedia

The following Wikimedia Foundation sister projects provide more on this subject:

-

Commons

Free media repository -

Wikibooks

Free textbooks and manuals -

Wikidata

Free knowledge base -

Wikinews

Free-content news -

Wikiquote

Collection of quotations -

Wikisource

Free-content library -

Wikiversity

Free learning tools -

Wiktionary

Dictionary and thesaurus